blade suggested this paper for suing slot attention as a loss for lcm let’s just get in https://arxiv.org/pdf/2006.15055

didn’t understand shit

(the paper is very old so i am not sure on it’s effectiveness lol)

didn’t understand shit

(the paper is very old so i am not sure on it’s effectiveness lol)

this is just like a small module to calculate in between it breaks down the given n input into sets and then use them to do something idk

slot attention module

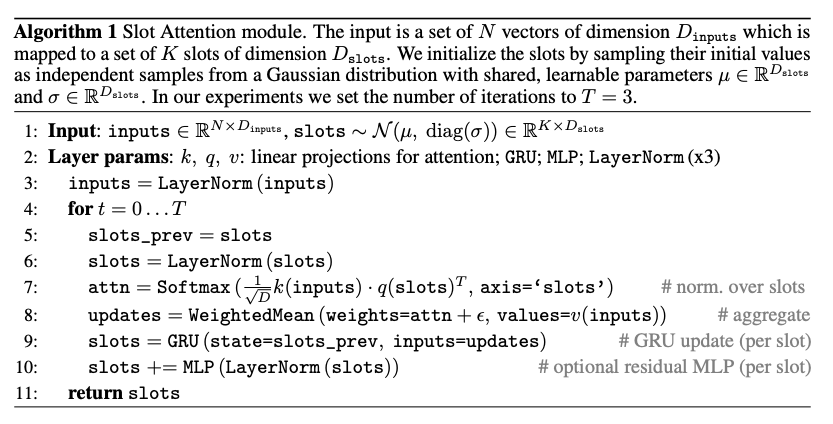

given vector N it breaks it down into sets of vector K these are fixed number each is a slot the slot actually learns a strategy for finding unique features for each new input over the iterations. this is done by the attention module competing in each slot

it starts of by giving each slots it’s values it randomly pulls this from a gaussian distribution where the mean and varience are learnable parameters (during training time fixed value during test time). this initialized of the slot vectors happens for each new input in both training and testing.

after this

For T iterations (e.g., T=3):

For T iterations (e.g., T=3):

- Normalization (Optional but often used): Input features and current slot values might be layer-normalized.

- Attention Calculation:

- Slots generate “queries” (q(slots)).

- Input features generate “keys” (k(inputs)).

- Attention scores are computed (e.g., dot product between queries and keys).

- These scores are normalized across slots (softmax over the slot dimension). This creates competition: slots compete to “explain” or attend to parts of the input. This results in an attention weight matrix (attn).

- Weighted Aggregation of Input Values:

- Input features also generate “values” (v(inputs)).

- The attention weights are used to compute a weighted mean of these input values for each slot. This gives each slot an “update” vector derived from the parts of the input it attended to.

- Slot Update (Recurrent):

- Each slot’s current vector is updated using its previous state and the new “update” vector, typically via a Gated Recurrent Unit (GRU).

- Optional MLP + Residual: The updated slot vector might be passed through a small Multi-Layer Perceptron (MLP) with a residual connection for further refinement.

i actually have so many problems with this first of all how does it do the query calculation for an input convert it into n number of slots and then multiply key and then handle value that seems like a lot it would have to increase a fuck ton of calculation for each input token so the fucking paper is actually using CNNs for features of each image and they just pass all the features as inputs and calculate slots and attention etc for each one of them ??? i have been thinking of this as an LLM paper when it’s not i gotta think in terms of CNNs (fuck me)