https://arxiv.org/pdf/2507.16003

let’s see i already know the overview is that prompts teach but what new insight can it give us. paper is from google research so that is cool.

basic idea that they give in the abstract is that if you stack an MLP after the attention block it

imprints the prompt and what change it made onto the mlp.

ok wow

ok wow

so it’s not exactly imprinting the context but like a weight update of rank 1 on the first layer, now this is not directly “imprinting” but with some tecnique we can interpretibility this shit hmm hmmm

maybe using a vector model.

starting with they define a contextual layer that given an input performs the function or with context we get . self-attention is a type of contextual layer because it builds the context. when performing the function it gives the output based on both the context and the input with respect to the last token so we can get the update using

∆A(C) := A(C,x)−A(x) \tag{1}

basically what change the function A got with respect to with and without the context

so it’s not exactly imprinting the context but like a weight update of rank 1 on the first layer, now this is not directly “imprinting” but with some tecnique we can interpretibility this shit hmm hmmm

maybe using a vector model.

starting with they define a contextual layer that given an input performs the function or with context we get . self-attention is a type of contextual layer because it builds the context. when performing the function it gives the output based on both the context and the input with respect to the last token so we can get the update using

∆A(C) := A(C,x)−A(x) \tag{1}

basically what change the function A got with respect to with and without the context

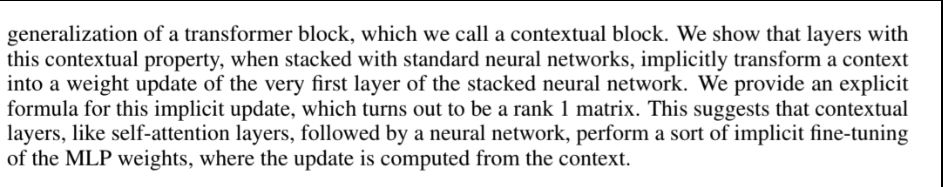

then they give their first definition of how contextual block works so a normal attention block is

flowchart LR Normal transformer block (left) subgraph Normal_Block direction TB X1["Input token x"] --> SA["Self-Attention"] SA --> D1["Dense Layer<br/>(W z + b)"] D1 --> R1["Activation + Rest of MLP (fθ)"] R1 --> OUT1["Output"] end

so the main difference is just that in the first layer of the FFN after the self attention layer we do the implicit update (the that we found after the diff between in eq.1) so we get

where = , is the first layer of the FFN after self-attention and are the rest of the connections layers and activations. this later proves due to the context there is an implicit weight update happening in the subsequent FFNs like and even if done manually it yields the same results. where the final equation we get is :

where

and then there’s a proof for it

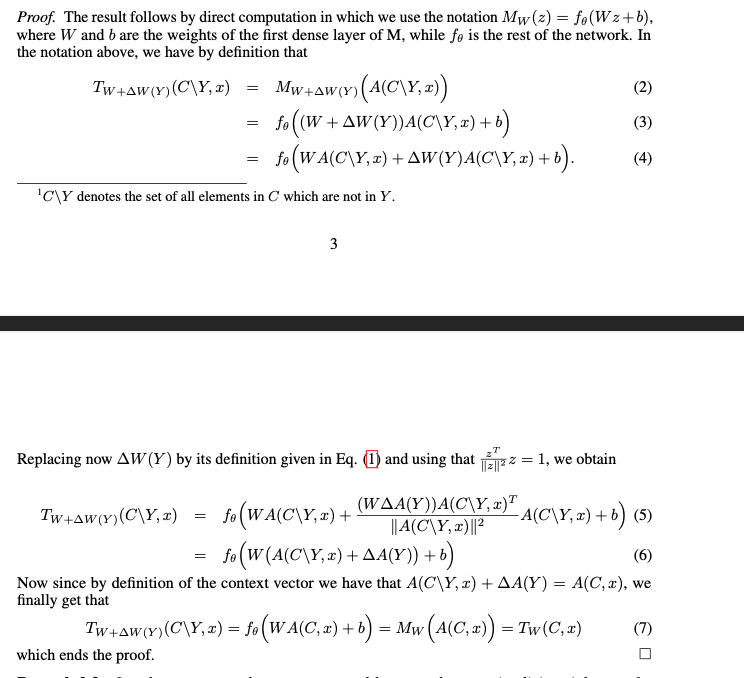

with full context where the update formula looks like

with full context where the update formula looks like

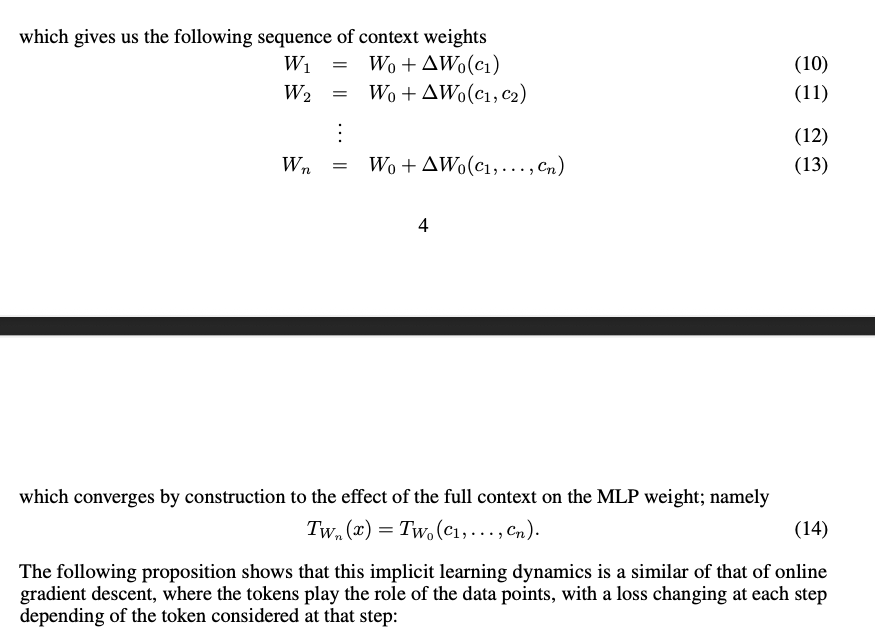

implicit learning dynamics of icl

after calculating the changes according to the increasing tokens from the context so say the context has it gives incremental updates which acording to them looks like an online gradient descent update. later tells us that the difference that we get in is basically the loss or gradient for the update (refer to eq1 if needed)

later tells us that the difference that we get in is basically the loss or gradient for the update (refer to eq1 if needed)